Everything Revealed at Google I/O 2024: Gemini 1.5 Pro, Project Astra, and Many AI Innovations

Google held its I/O 2024 developer conference to showcase all the new features of its technologies, just a week after Apple's launch of the new iPad Pro and iPad Air, and a day after OpenAI's unveiling of the GPT-4o language model.

Artificial intelligence was front and center from the very start, as the company opened with a review of its advances in the field. The first announcement of the event was that AI Overviews is coming to the United States and other countries, to provide more relevant information for certain searches. Here's everything that was presented at Google I/O 2024.

Gemini and Gemini 1.5 Flash

Once on stage, Sundar Pichai announced that Gemini will enhance the Photos app with Ask Photos, a feature that lets users query Google Photos in natural language to find pictures more easily. Gemini 1.5 Pro arrives with greater reasoning capacity and is available globally to all developers, and its context window grows to a maximum of 2 million tokens.

We also saw new features for Google Workspace: Gemini is being integrated into Gmail and other applications such as Google Meet, where it will record conversations and highlight the most important parts. Google also demonstrated NotebookLM running on Gemini 1.5 Pro, highlighting significant improvements in its voice generation system, which now sounds much more natural and human-like.

Demis Hassabis, co-founder of DeepMind, announced Gemini 1.5 Flash, a lighter model than Google's most advanced one, built for speed and efficiency and aimed at offering the lowest latency. It is now available to all developers through AI Studio.

Introducing Project Astra

One of the big announcements at the event was Project Astra, described as an AI agent that promises to be the future of virtual assistants for everyday life. Based on Gemini, it can identify what is around us through the smartphone camera and respond to queries naturally.

It understands multimodal information and shortens response times. In a video shown at the event, a user interacts with the AI model without looking at the phone. It can also solve mathematical problems. It is still under development.

Project Astra is a prototype from @GoogleDeepMind exploring how a universal AI agent can be truly helpful in everyday life. Watch our prototype in action in two parts, each captured in a single take, in real time ↓ #GoogleIO pic.twitter.com/uMEjIJpsjO

— Google (@Google) May 14, 2024

Content Creation Innovations

The tech company presented significant advances in content generation: Veo, Music AI Sandbox, and Imagen 3. Veo is a new video generation model capable of turning text into 1080p video. It is available in a new tool called VideoFX.

Music AI Sandbox is a music generation tool, developed in collaboration with some popular artists, while Imagen 3 is an improved version of its image generation model, now available in ImageFX.

AI Innovations in Google Search

Gemini is coming to Google Search to carry out searches for us. Google claims it rests on the three pillars needed to offer the best search: real-time information, quality systems, and the power of Gemini.

At the event, Google showed an example of asking Gemini for the best places to practice yoga and pilates, with the assistant doing the work of finding the best result. It can also plan an event. The goal is to let Google do all the work for you. Google also claims that the arrival of generative AI in the search engine will be a game-changer.

Gemini's multimodal system accepts voice and video input, so if a gadget isn't working, we can simply record a video of it and ask how to fix it.

This is Search in the Gemini era. #GoogleIO pic.twitter.com/JxldNjbqyn

— Google (@Google) May 14, 2024

Gemini Innovations in Google Workspace

The most notable change is that Gemini will be available in the sidebar of Workspace tools for all users starting next month. Gmail will get a button with the AI icon that quickly generates a summary.

It will also offer recommended actions, such as helping us organize receipts, and will assist in Google Sheets with analyzing and segmenting data. This will be available experimentally starting in September.

Users will be able to create their own AI Teammate, a companion that joins group conversations where every participant can see its responses and interact with it. Chip, the assistant shown, can answer questions about all the documents it has access to.

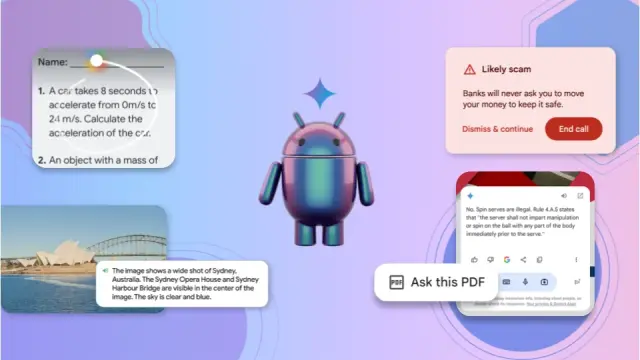

Gemini on Mobile Devices

Circle to Search will be available to more users and will bring major improvements. Another new feature will be Gemini Live, with which we can hold conversations in natural language and even interrupt the assistant while it responds. Support will also be added for seeing, in real time, what is happening around us through the camera.

Mobile devices will also receive Gems, customized versions of the chatbot that adapt to the needs the user describes. Gemini Advanced subscribers will get a larger context window and will be able to upload a 1,500-page PDF, hour-long videos, or up to 30,000 lines of code.

If we are watching a video on YouTube, we can call up Gemini and ask questions about it. On YouTube and on any other video or photo platform, there will be no need to open a separate application to use Gemini, as it will open in a floating window. All of this translates to a better experience.

Gemini Nano, for its part, will become multimodal on Pixel devices, meaning it will understand more types of information, such as images, sounds, and voice, and will work without an Internet connection. Calls are getting an update too: if someone tries to scam us, the phone will be able to detect it and show a warning, thanks to Gemini.

And you’ll also be able to ask questions with video, right in Search. Coming soon. #GoogleIO pic.twitter.com/zFVu8yOWI1

— Google (@Google) May 14, 2024