ChatGPT Vulnerability: Millions of Unprotected Conversations on macOS

The ChatGPT desktop app for Mac stored users' conversations unencrypted, in a location on the computer that other software could freely access. As a result, any other app, including malware, could read users' conversations with the chatbot.

The flaw was revealed on X by data and electronics engineer Pedro José Pereira Vieito, who pointed out that chats were stored in the following unprotected location on the Mac:

~/Library/Application\ Support/com.openai.chat/conversations-{uuid}/
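Because that folder sat outside the sandbox protections Pereira describes, any program running under the same user account could open it. As a rough illustration, the Python sketch below lists the files inside any `conversations-*` directory under that path that the current process is permitted to read. It assumes only the directory layout reported by Pereira; the helper name `readable_files` is hypothetical, and the script never opens or prints file contents.

```python
import os
from pathlib import Path


def readable_files(directory: Path) -> list[Path]:
    """Return every regular file under `directory` that this process may open for reading."""
    if not directory.is_dir():
        return []
    return sorted(
        p for p in directory.rglob("*") if p.is_file() and os.access(p, os.R_OK)
    )


if __name__ == "__main__":
    # Location reported by Pereira; the {uuid} suffix differs per install,
    # so match any "conversations-*" subdirectory.
    support = Path.home() / "Library" / "Application Support" / "com.openai.chat"
    if support.is_dir():
        for conv_dir in sorted(support.glob("conversations-*")):
            for f in readable_files(conv_dir):
                print(f)  # listing only; contents are never opened
```

This is only a permission check: `os.access` says whether a file can be opened, not whether its contents are plain text or encrypted.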

In his post of July 2, 2024, Pereira Vieito (@pvieito) wrote: "The OpenAI ChatGPT app on macOS is not sandboxed and stores all the conversations in **plain-text** in a non-protected location."

"So basically any other app / malware can read all your ChatGPT conversations," the engineer added on X.

Pereira also said he found the flaw out of curiosity about why the ChatGPT app for Mac was available only from OpenAI's website and not from the Mac App Store. Apple requires apps distributed through the Mac App Store to use its App Sandbox.

After OpenAI learned of the flaw, it updated the app to encrypt the stored conversations.

On Threads, where Pereira shared the same post and it drew more discussion, Andreas Müller commented: "That’s a problem of macOS. My os should prevent such situations". Pereira responded that since macOS Mojave 10.14, apps have needed the user's explicit permission before accessing their private data.

"macOS has blocked unauthorized access to any user private data since macOS Mojave 10.14 (6 years ago!). Any app accessing private user data (Calendar, Contacts, Mail, Photos, the Documents & Desktop folders, any third-party app sandbox, etc.) now requires explicit user access. OpenAI chose to opt-out of the sandbox and store the conversations in plain text in a non-protected location, disabling all of these built-in defenses," Pereira said.

A "sandbox" is an isolated, controlled environment in which an application runs with restricted permissions. It limits which system resources the app can reach, such as sensitive files or operating system settings.

What It Means for a Chat Not to Be Encrypted

When a chat is not encrypted, the conversations sent and received through that platform or application are not protected by any encryption process. In the case of the ChatGPT app for Mac, this meant they were saved on the computer as ordinary, readable text.

Encryption is a security method that converts data into an unreadable format, so that only someone holding the right decryption key can recover the content. It can protect data while it travels over the network between sender and receiver, and also while it is stored on a device ("at rest"), which is the case at issue here.

Because the app stored conversations unencrypted and outside the sandbox, any program running under the user's account, including malware, could read them.
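The difference encryption at rest makes can be shown with a deliberately minimal example. The sketch below uses a one-time pad (XOR with a random key as long as the message) only because it needs nothing beyond Python's standard library. It is not how OpenAI encrypts conversations, which this article does not describe; a real app would use a vetted authenticated cipher such as AES-GCM. The function names `encrypt` and `decrypt` are hypothetical.

```python
import secrets


def encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """Encrypt with a one-time pad and return (key, ciphertext).

    The key is fresh random bytes, as long as the message, and must never be reused.
    """
    key = secrets.token_bytes(len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, key))
    return key, ciphertext


def decrypt(key: bytes, ciphertext: bytes) -> bytes:
    """Recover the plaintext by XOR-ing the ciphertext with the same key."""
    if len(key) != len(ciphertext):
        raise ValueError("key and ciphertext must have the same length")
    return bytes(c ^ k for c, k in zip(ciphertext, key))


if __name__ == "__main__":
    message = "User: how do I reset my password?".encode("utf-8")
    key, ciphertext = encrypt(message)
    print(ciphertext.hex())  # random-looking bytes, different on every run
    print(decrypt(key, ciphertext).decode("utf-8"))  # the original message
```

Without `key`, the stored `ciphertext` reveals nothing about the conversation to another program that reads the file. The protection is only as strong as the key's storage, though: keeping the key in a readable file next to the encrypted data would undo the benefit, which is why macOS apps generally rely on the Keychain for secrets.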

How OpenAI Trains ChatGPT

OpenAI, the company behind ChatGPT, uses information provided by users to train its artificial intelligence models. According to the company, this helps "perfect the model," and each user can decide whether to contribute to this process.

"We retain certain data from your interactions with us, but we take steps to reduce the amount of personal information in our training datasets before they are used to improve and train our models," the company explains on its privacy policy page. However, the text does not specify exactly which data it retains.

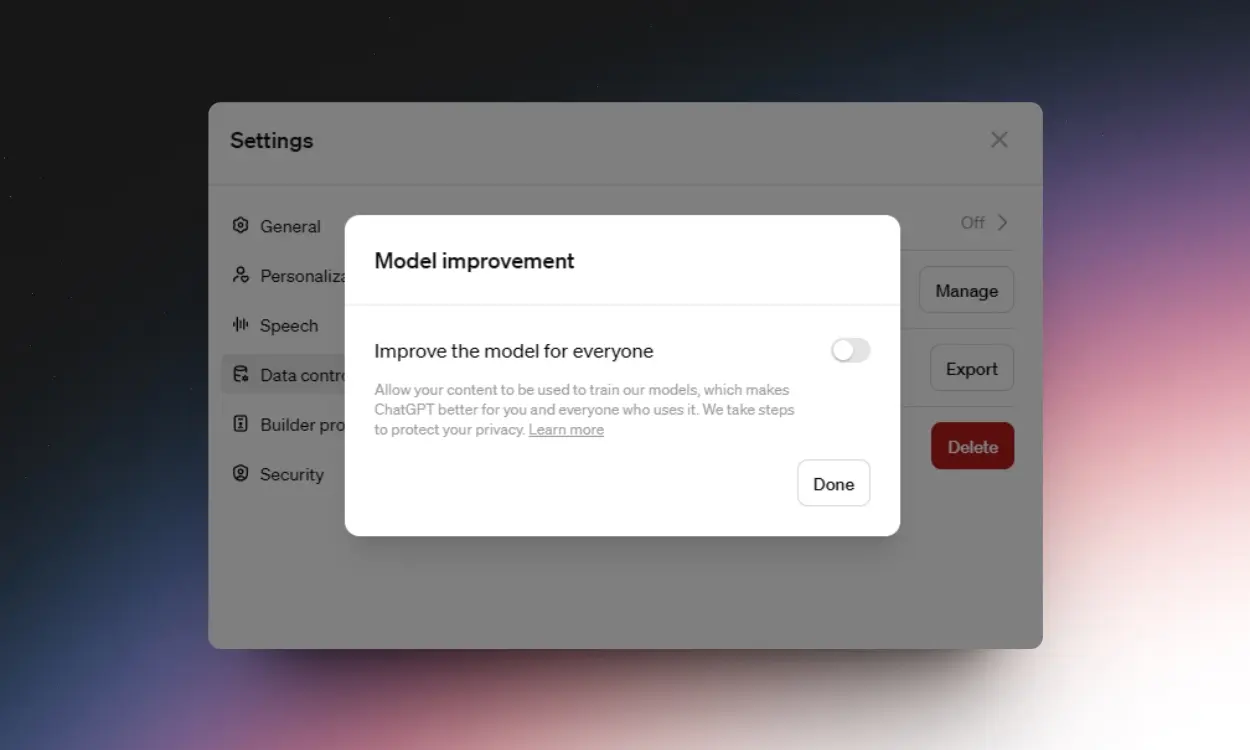

Users can change this setting in a few simple steps to keep their conversations out of model training.

How to Prevent OpenAI from Using My Data to Train ChatGPT

- Log in to ChatGPT.

- Click your profile picture in the top-right corner and select Settings.

- Select 'Data Controls.'

- Go to the 'Improve the model for everyone' section and disable this option.

- Click 'Done.'

According to OpenAI, the 'Improve the model for everyone' option allows user content to be used to train its models. Whether to contribute personal data to the development of artificial intelligence is left to each user.